MVP application for launching and managing A/B tests

My role: Senior Product Designer.

Team: iOS Team Lead, QA, Frontend, Backend.

Tools: Figma, ProtoPie, Confluence, Jira.

Year: 2023.

1. Summary

We developed a tool for tracking A/B tests that centralized the way they are run. As a result, the company gained a more transparent and convenient way to test hypotheses. With it, we ran numerous experiments that produced statistically significant changes in metrics, and we spent less time preparing for each one.

2. About the Project

Ewa had started an effort to optimize its A/B testing process. Together with the iOS Team Lead and analysts, we began building an in-house tool, working name A/B Toolkit, for creating and tracking A/B tests. Our iOS Team Lead led development; I helped redesign the testing processes and designed the MVP.

3. Research and Analysis

At the start of the project, we analyzed the existing A/B testing process in detail. The analysis revealed the main issues the team faced, including inefficient methods and limited resources. The research also pointed to ways of improving the process that would let us streamline testing and make it more efficient going forward.

3.1. Current process

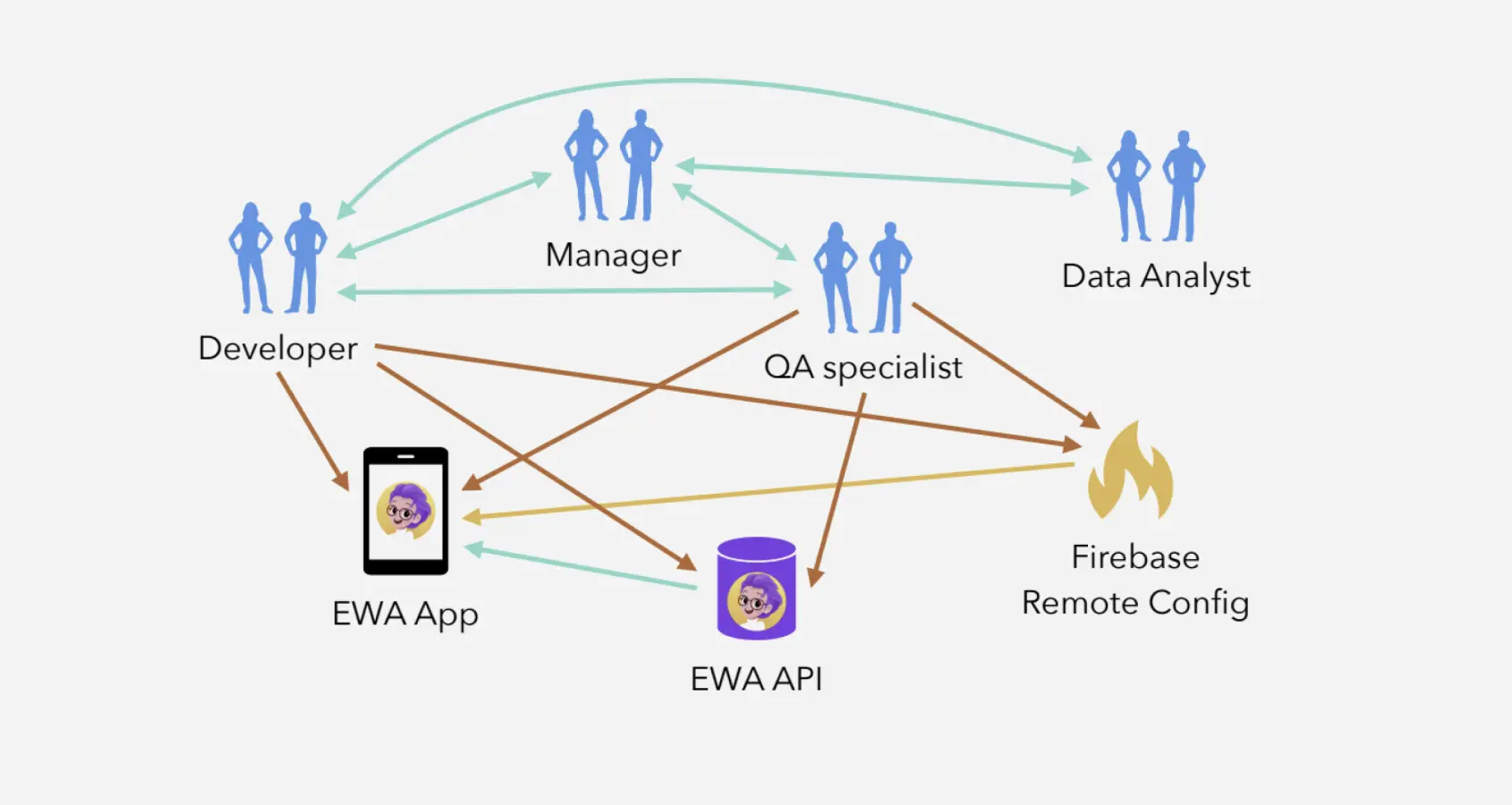

Running an experiment involves many participants. Because of communication gaps, it is often unclear who is responsible for launching an experiment, which leads to conflicts between managers and developers.

3.1.1 Fragmentation of A/B test sources

Tests are managed across several components: EWA App, EWA API, and Firebase Remote Config. This makes tests hard to coordinate and manage, since each component has its own specifics and requires a separate approach.

3.1.2 No change history

Changes pass through many participants (managers, data analysts, developers, quality assurance specialists), and there is no central place where they are tracked. This makes deleted tests hard to restore and makes it harder to review test activity for past periods.

3.1.3 High probability of human error

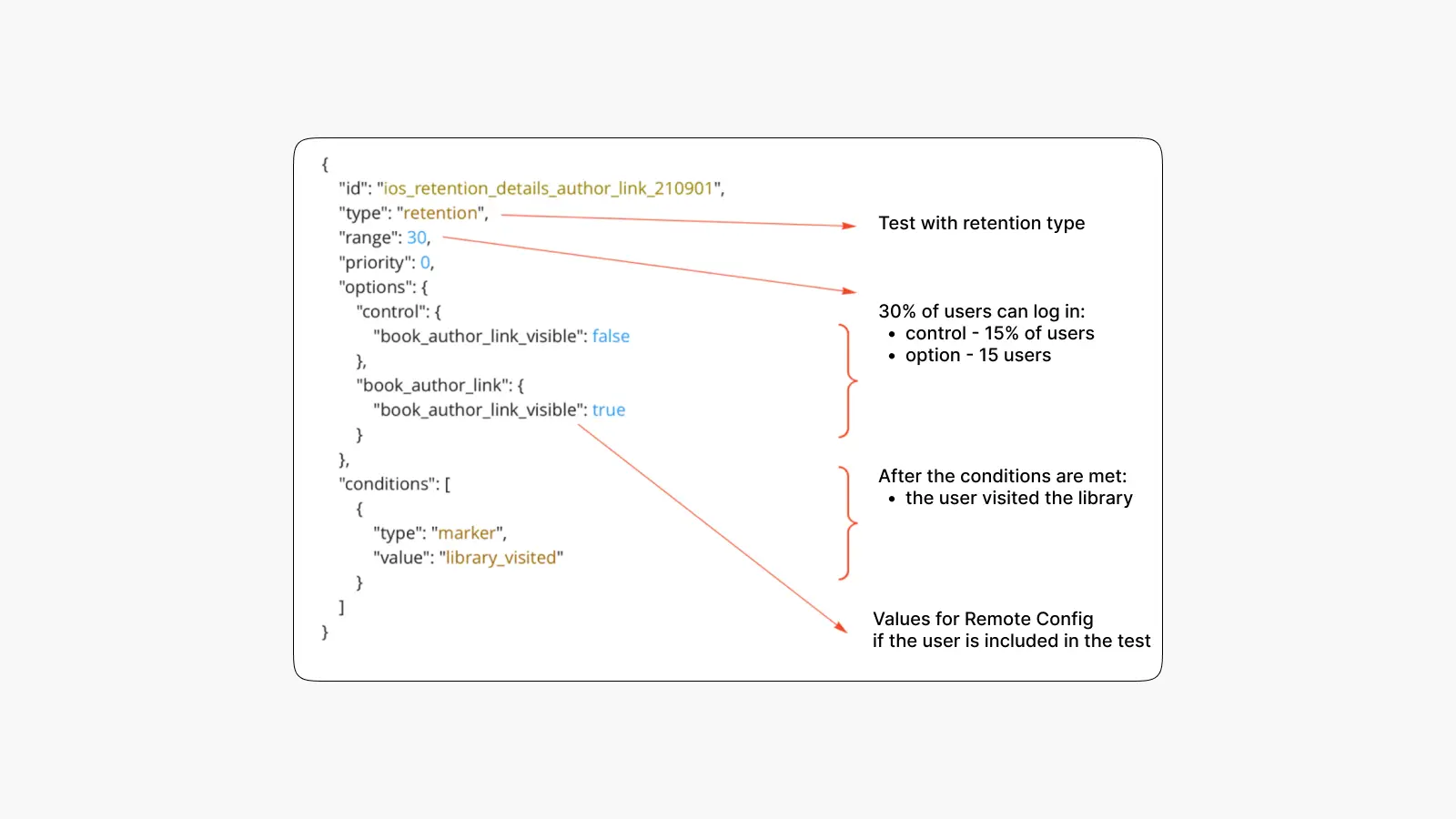

The variety of participants and components involved in testing significantly increases the likelihood of errors when entering data and setting up configurations. Launching a test often requires editing JSON by hand, which adds risk, since not every employee is comfortable with the format. A mistake in the JSON structure can halt tests and drop users out of them. As a result, a single error of this kind can set the A/B testing process back by 2-3 weeks.
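To make this failure mode concrete, here is a minimal Python sketch, assuming several experiments share one JSON document and that the client falls back to an empty configuration when parsing fails. The config contents and the `load_experiments` helper are hypothetical and do not represent EWA's actual format or client code.

```python
import json

# Hypothetical shared config: several experiments live in one JSON document.
# The trailing comma after "B" is the kind of slip a manual edit can introduce.
RAW_CONFIG = """
{
  "paywall_price": {"variants": ["A", "B",]},
  "onboarding_copy": {"variants": ["A", "B"]}
}
"""


def load_experiments(raw: str) -> dict:
    """Parse the config; on any syntax error, fall back to 'no experiments'.

    Assumption: the client treats an unreadable config as empty, so every
    user gets the default experience and leaves all running tests.
    """
    try:
        return json.loads(raw)
    except json.JSONDecodeError as err:
        print(f"config rejected: {err.msg} (line {err.lineno})")
        return {}


experiments = load_experiments(RAW_CONFIG)
# One typo in one experiment removes both experiments, not just the broken one.
print(sorted(experiments))  # -> []
```

Because the parser rejects the whole document, a single misplaced comma in one experiment takes every experiment in the file offline at once.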

3.1.4 Decentralization and platform differences

Tests are launched and managed through different platforms, such as EWA App and Firebase Remote Config. This requires the teams (especially QA specialists) to account for all the differences, which complicates their work and increases the likelihood of errors.

4. Solution

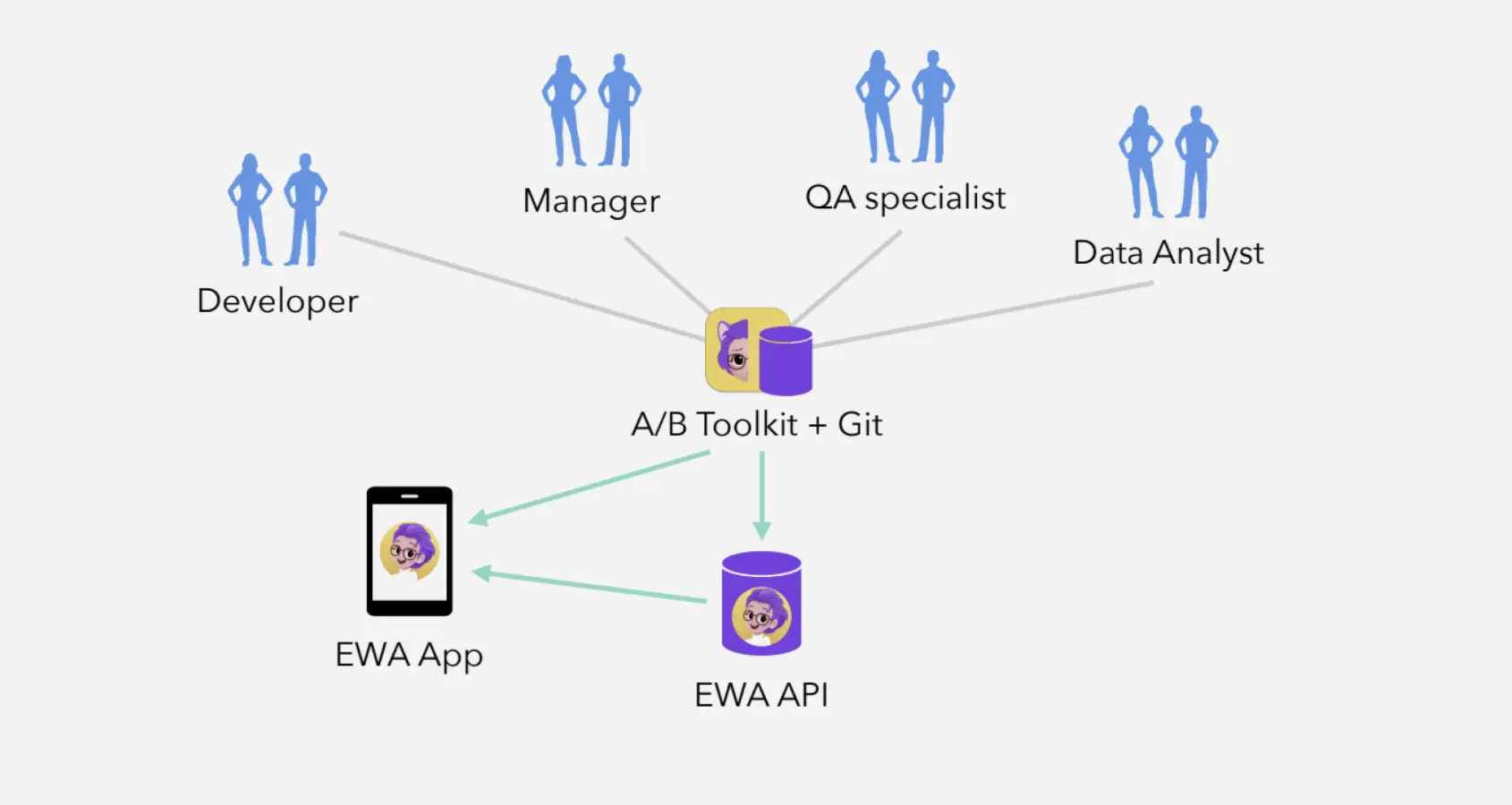

The core of the solution was introducing A/B Toolkit together with a Git repository, so that tests are managed centrally and their change history is stored in one place. This let us automate the publishing of local and cloud tests and send change notifications to Slack, which significantly reduced the likelihood of human error. Replacing manual JSON editing with a UI that validates data before upload further reduced the number of potential failures. We also expanded Android support and began gradually phasing out Firebase, which improved compatibility and reduced errors caused by platform differences. Together, these measures made A/B testing more centralized, automated, and resilient to errors, and made the work easier for everyone involved.
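The validation step can be sketched as a set of checks that run before anything is uploaded. This is a minimal illustration, not A/B Toolkit's actual code: the `Experiment` and `Variant` types, the `local`/`cloud` source field, and the rule that traffic weights sum to 100 are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Variant:
    name: str
    weight: int  # share of traffic, in percent (assumed convention)


@dataclass
class Experiment:
    name: str
    source: str  # hypothetical: "local" (shipped in a release) or "cloud" (served by the API)
    variants: list[Variant]


ALLOWED_SOURCES = {"local", "cloud"}


def validate(exp: Experiment) -> list[str]:
    """Return readable problems; an empty list means the test may be uploaded."""
    errors = []
    if not exp.name.strip():
        errors.append("experiment name is empty")
    if exp.source not in ALLOWED_SOURCES:
        errors.append(f"unknown source {exp.source!r}; expected 'local' or 'cloud'")
    if len(exp.variants) < 2:
        errors.append("an A/B test needs at least two variants")
    names = [v.name for v in exp.variants]
    if len(names) != len(set(names)):
        errors.append("variant names must be unique")
    if any(v.weight < 0 for v in exp.variants):
        errors.append("variant weights cannot be negative")
    total = sum(v.weight for v in exp.variants)
    if total != 100:
        errors.append(f"variant weights sum to {total}, expected 100")
    return errors


# Usage: a draft with a duplicated variant name and weights that do not add up.
draft = Experiment("paywall_price", "cloud", [Variant("A", 50), Variant("A", 40)])
for problem in validate(draft):
    print(problem)
# variant names must be unique
# variant weights sum to 90, expected 100
```

Returning every problem at once, rather than stopping at the first, lets a UI show each issue next to the relevant field before the user tries to publish.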

4.1. Roadmap for changes

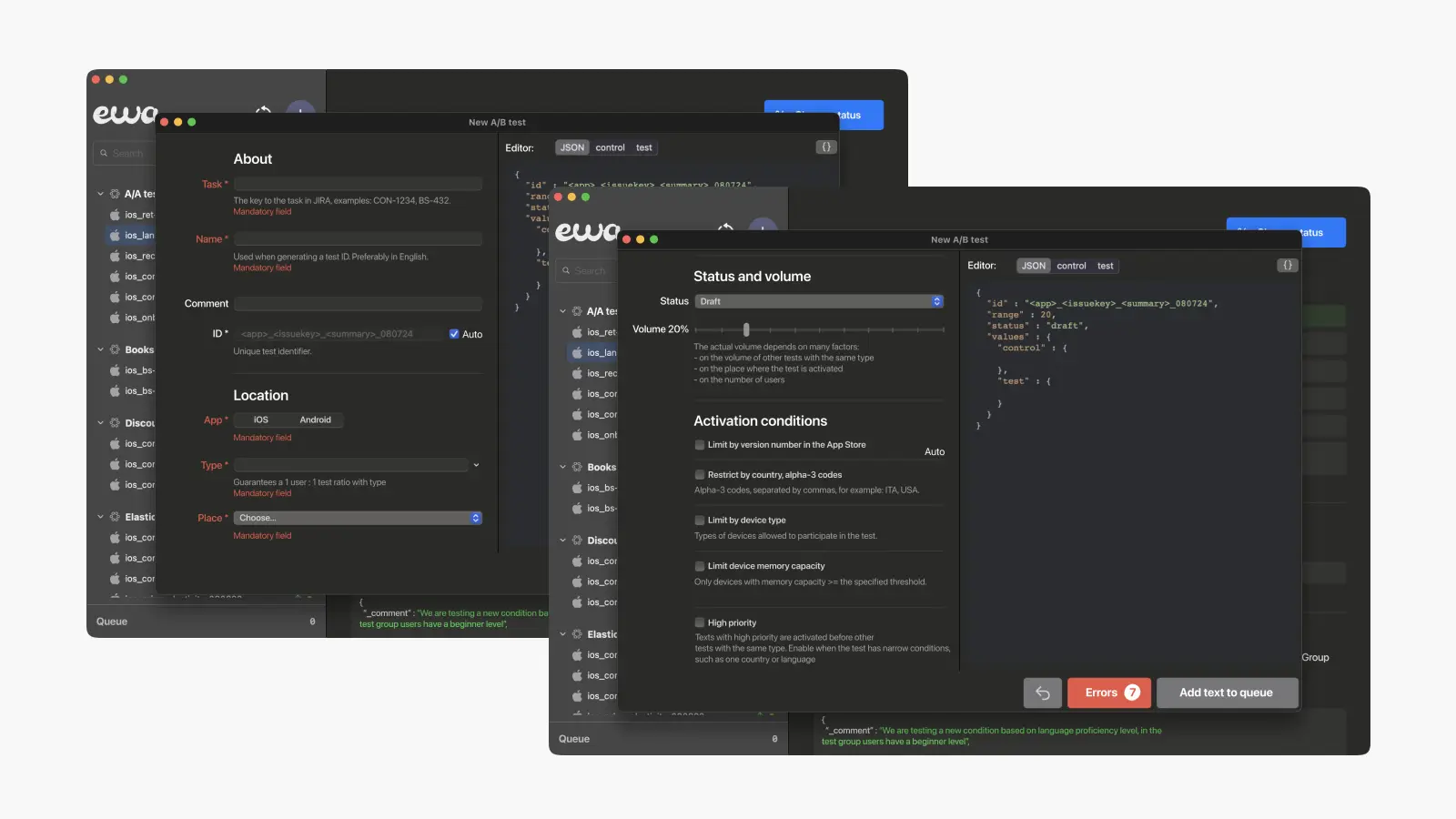

Once the approach was agreed, we split the rollout of the new process into several stages. In the first stage, we introduced A/B Toolkit, a macOS application that centralized the management of local and cloud tests. It automatically uploads local tests into each new release and cloud tests to EWA API, and it sends notifications about every change to a Slack channel. We also set up a Git repository to store the history of changes. All changes are now made through a graphical interface instead of by editing JSON files by hand.
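The publish-and-notify flow can be sketched as three small steps: diff the previous and new configuration snapshots (for example, two Git commits), route each experiment to the release bundle or the API according to its source, and build a Slack message describing the change. The snapshot shape, the `source` field, and the helper names are hypothetical; the only Slack detail relied on is that an incoming webhook accepts a JSON body with a `text` field. The sketch builds the payload but does not send it.

```python
import json


def diff_snapshots(old: dict, new: dict) -> dict:
    """Compare two {experiment_name: config} snapshots, e.g. two Git commits."""
    added = sorted(new.keys() - old.keys())
    removed = sorted(old.keys() - new.keys())
    changed = sorted(k for k in old.keys() & new.keys() if old[k] != new[k])
    return {"added": added, "removed": removed, "changed": changed}


def route(new: dict) -> tuple[dict, dict]:
    """Split experiments into those bundled with the next release and those pushed to the API."""
    local = {k: v for k, v in new.items() if v["source"] == "local"}
    cloud = {k: v for k, v in new.items() if v["source"] == "cloud"}
    return local, cloud


def slack_payload(diff: dict, author: str) -> str:
    """Build a JSON body for a Slack incoming webhook (only the 'text' field is used)."""
    lines = [f"A/B config updated by {author}"]
    for kind in ("added", "removed", "changed"):
        if diff[kind]:
            lines.append(f"{kind}: {', '.join(diff[kind])}")
    return json.dumps({"text": "\n".join(lines)})


# Usage with hypothetical snapshots before and after an edit.
old = {
    "paywall_price": {"source": "cloud", "variants": {"A": 50, "B": 50}},
    "onboarding_copy": {"source": "local", "variants": {"A": 50, "B": 50}},
}
new = {
    "paywall_price": {"source": "cloud", "variants": {"A": 30, "B": 70}},
    "dark_mode": {"source": "local", "variants": {"A": 50, "B": 50}},
}
diff = diff_snapshots(old, new)
local, cloud = route(new)
print(diff)  # {'added': ['dark_mode'], 'removed': ['onboarding_copy'], 'changed': ['paywall_price']}
print(slack_payload(diff, "qa"))
```

Deriving the notification from a diff of snapshots, rather than from what the user clicked, means the Slack message always matches what was actually committed and published.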

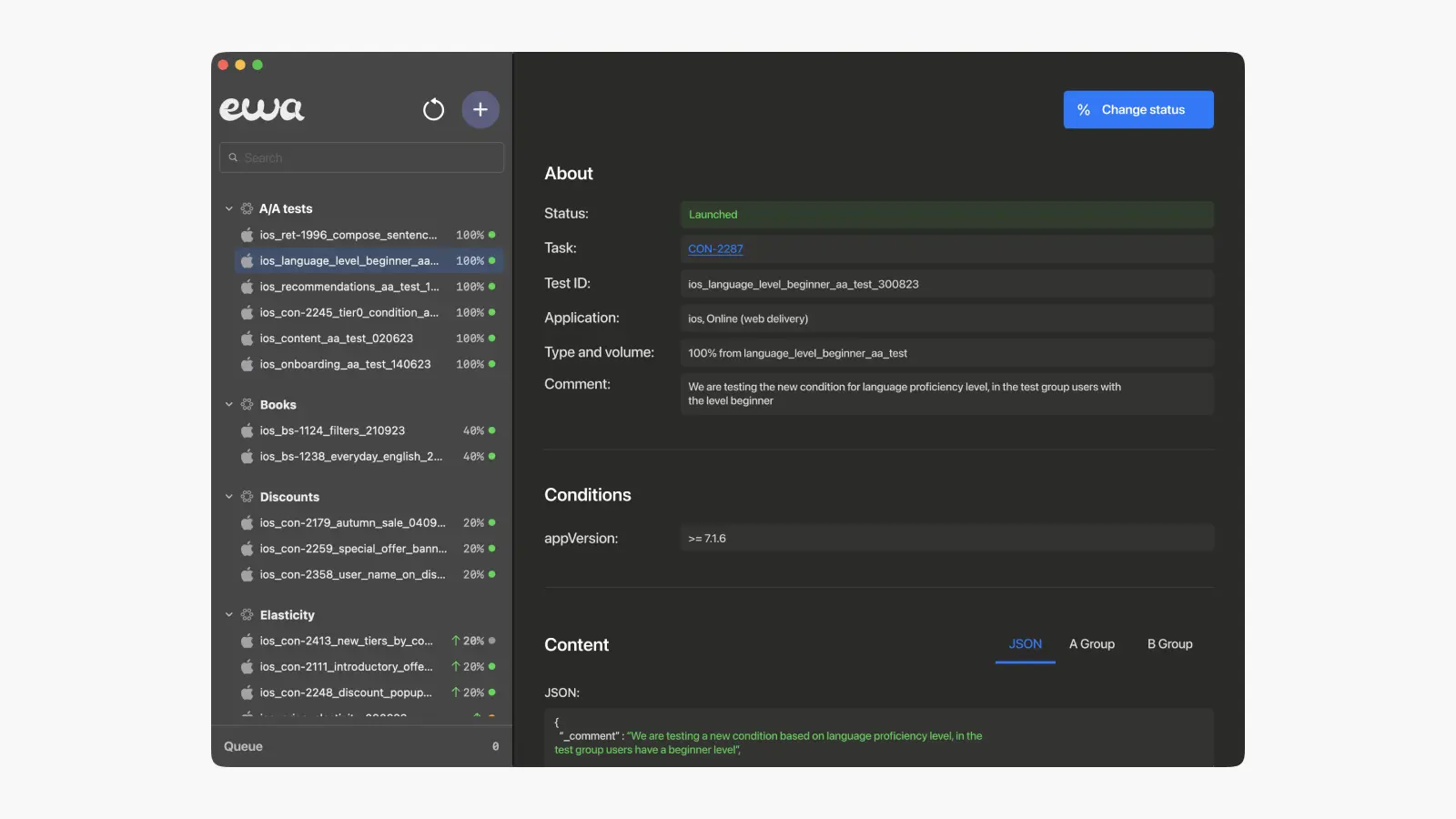

5. Design of A/B Toolkit

For the MVP, we chose a native macOS application: it was the most economical option because the company already had enough iOS developers. The downside was that colleagues without macOS or iOS devices could not use A/B Toolkit, but having a first version helped us debug the product and gather valuable feedback for further improvement.

5.1. Design v1


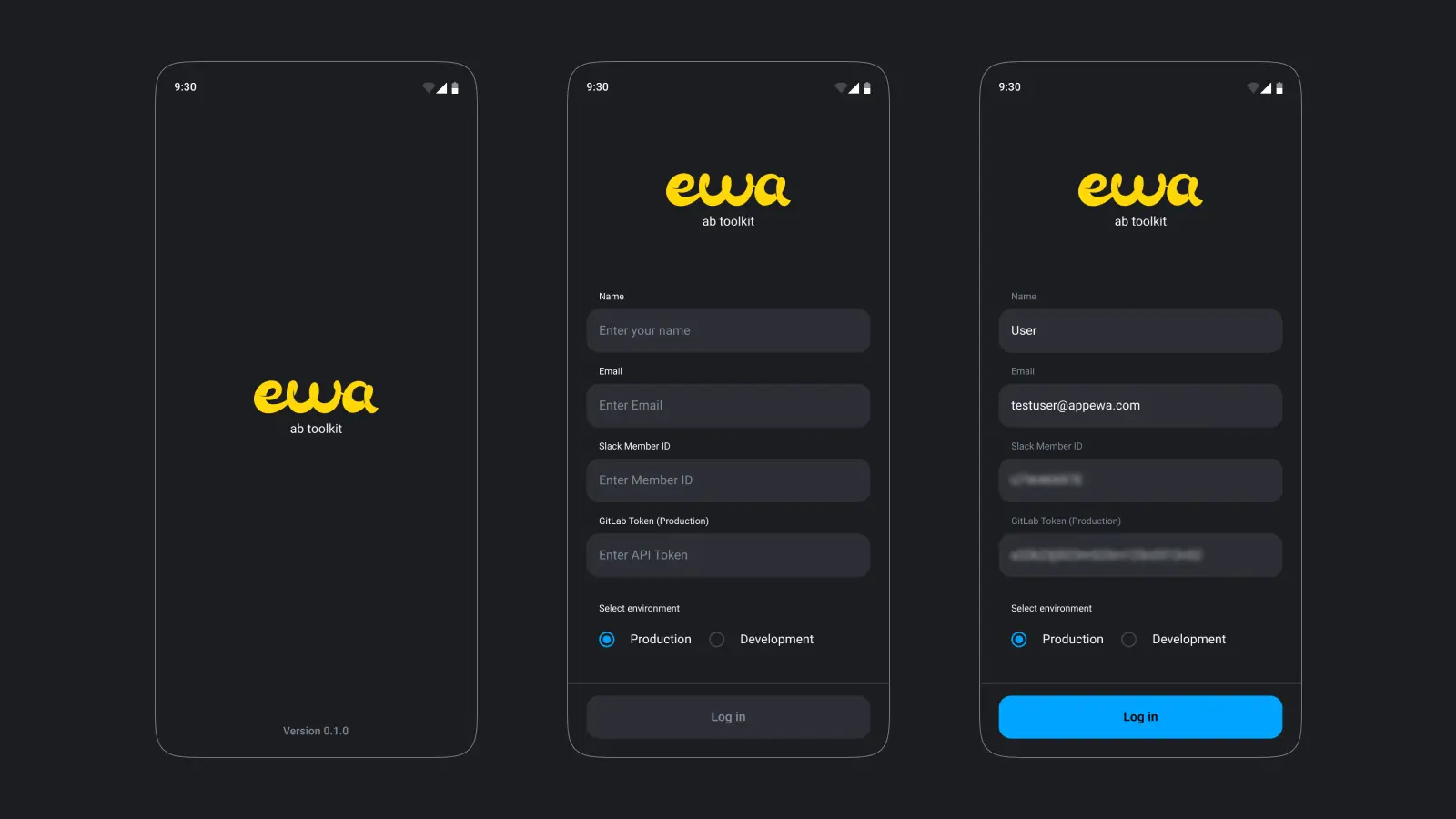

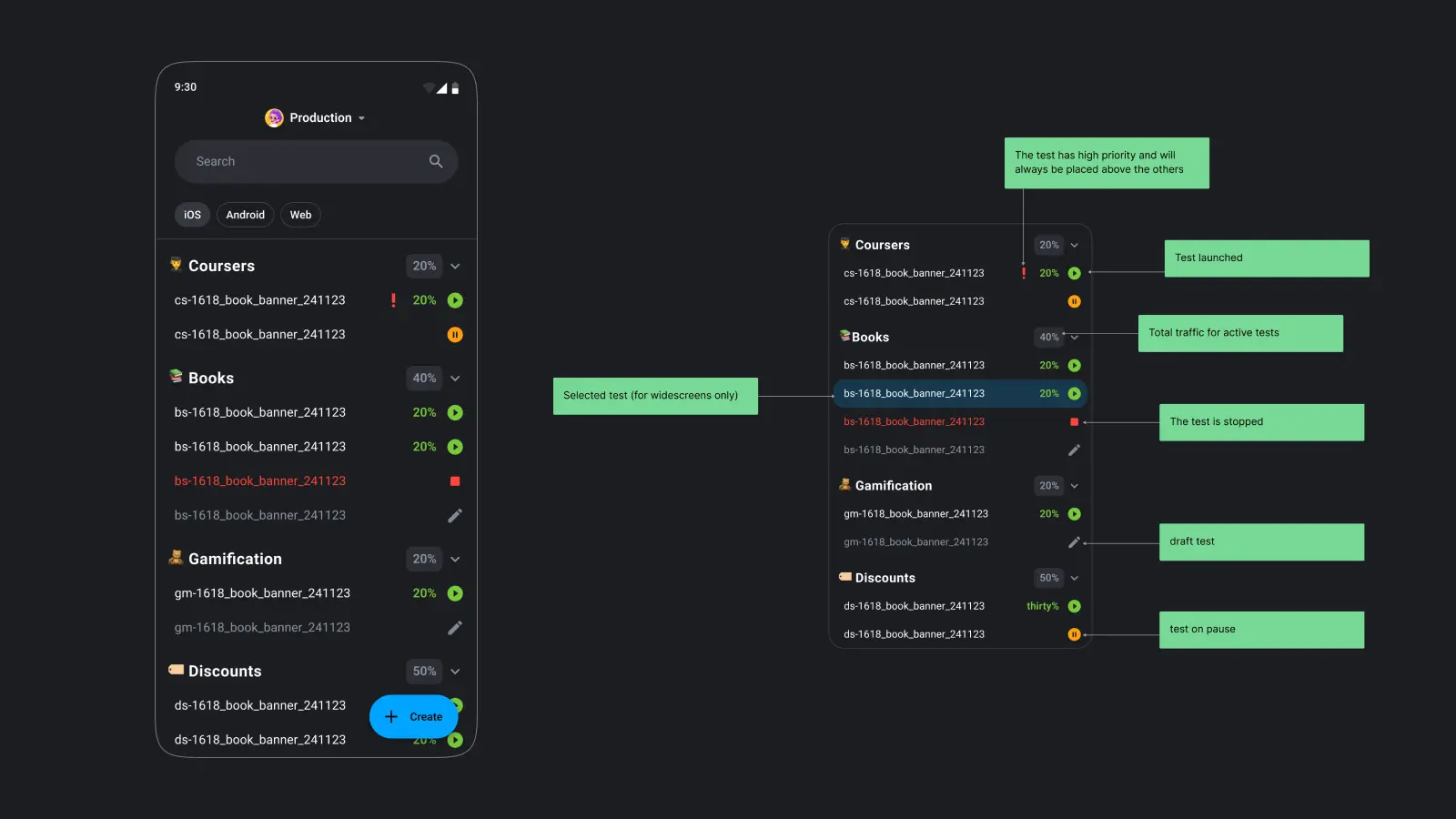

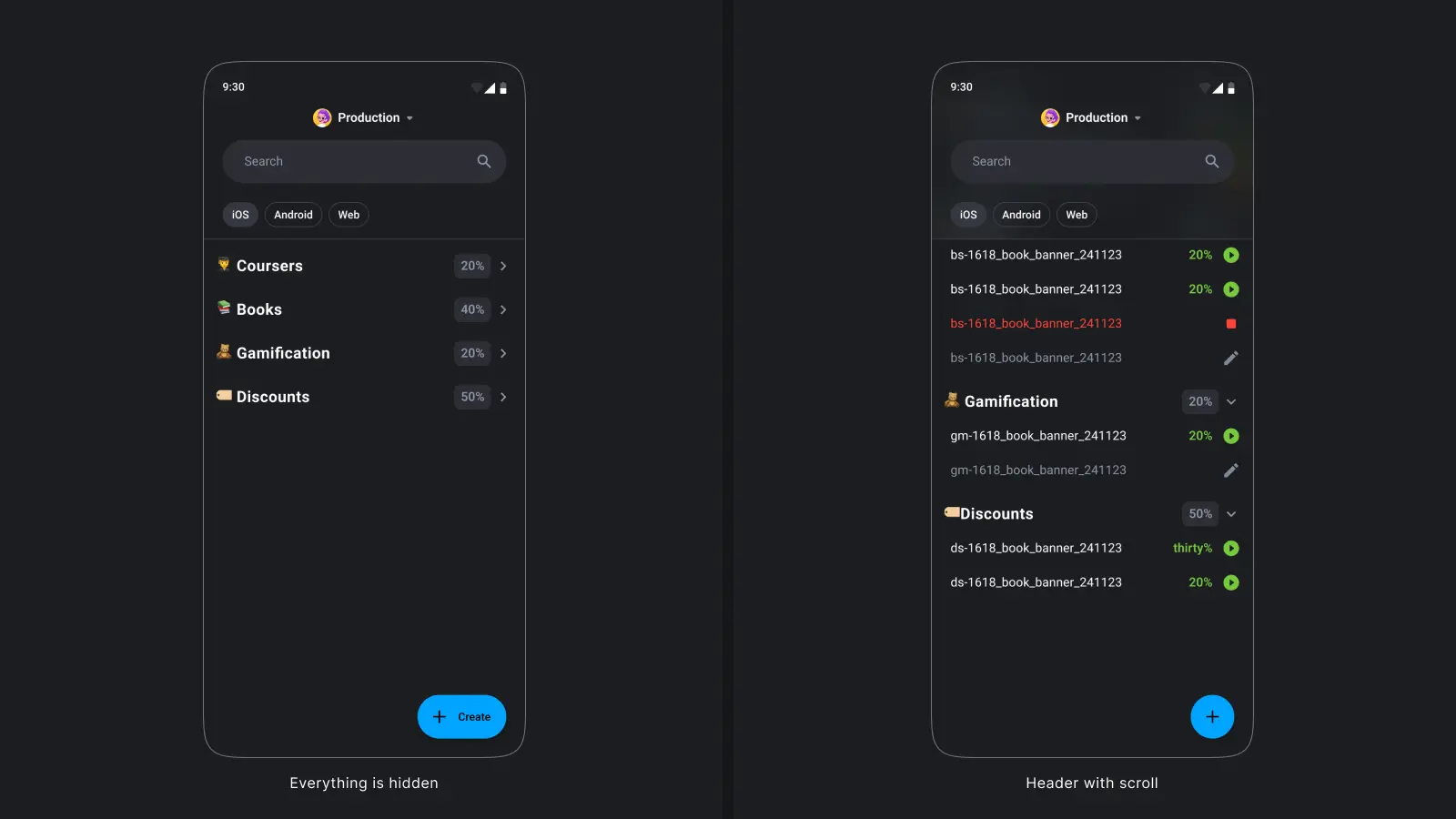

5.2. Design v2

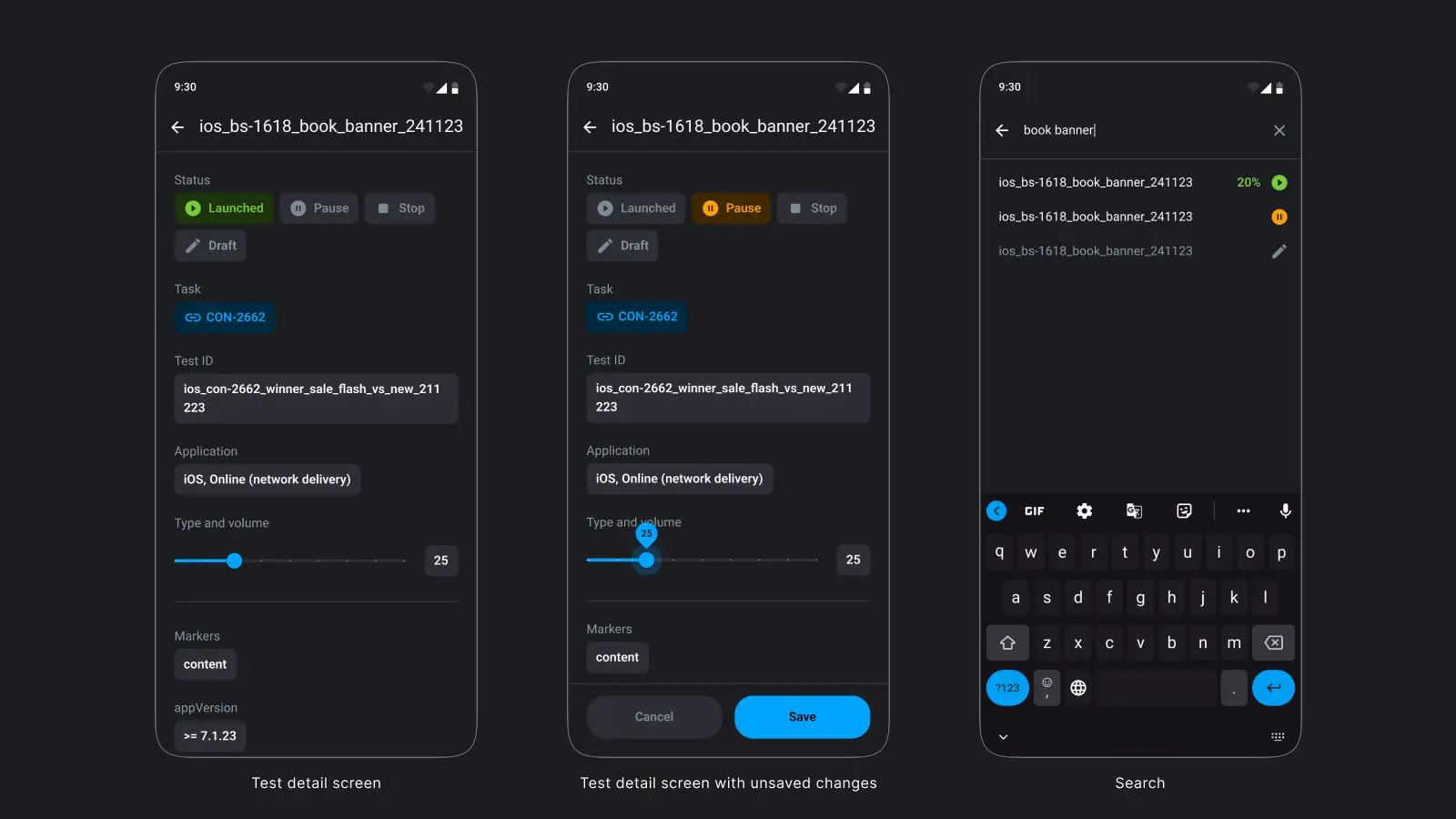

In the second iteration, A/B Toolkit was rewritten in Kotlin as a cross-platform application, so it runs regardless of the device's operating system or type. The layout adapts to different screen sizes, so the app can be used on both desktop and mobile.

6. Conclusion

Introducing A/B Toolkit and changing the testing processes improved control over A/B tests, reduced errors, and increased team efficiency. Automating test publishing and notifications reduced the risk of human error and simplified the testing process. As a result, we ran numerous successful tests that significantly improved the company's metrics.